Projects

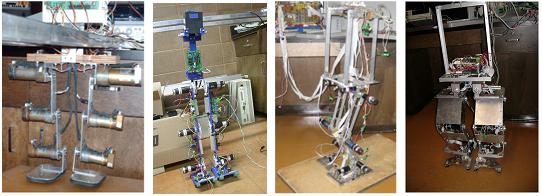

Biped Walking Robots

Four biped walking robots have been developed at Lakehead University. The first robot had 6 DOF and was suspended from an overhead track. The second had 7 DOF: six on the legs and a seventh controlling a counterweight that allowed the robot to lift its feet without tipping over. The third had 10 DOF and could walk passively. It was controlled by a PD controller with gain scheduling and gravity-compensation terms obtained through trial and error. The latest robot has 12 DOF and is controlled by a computed-torque scheme with active balance in the frontal plane.
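The two control laws named above (PD with gravity compensation, and computed torque) can be compared on a single rigid link (a pendulum), which is far simpler than a 10- or 12-DOF biped. This is a minimal sketch under stated assumptions: the link parameters and gains are hypothetical, the gravity term comes from a model rather than from trial-and-error tuning, gain scheduling is not shown, and the names `pd_gravity`, `computed_torque`, and `simulate` are illustrative only.

```python
import math

# Hypothetical single-link parameters (not the robots' actual values).
M_LINK = 1.0   # mass [kg]
L_LINK = 0.5   # distance to centre of mass [m]
B_LINK = 0.1   # viscous friction [N*m*s/rad]
G = 9.81       # gravitational acceleration [m/s^2]


def inertia(q):
    """Inertia term M(q); constant for a single point-mass link."""
    return M_LINK * L_LINK ** 2


def gravity(q):
    """Gravity torque G(q), with q measured from the downward vertical."""
    return M_LINK * G * L_LINK * math.sin(q)


def pd_gravity(q, qd, q_des, kp=40.0, kd=8.0):
    """PD setpoint regulation plus a model-based gravity-compensation term."""
    return kp * (q_des - q) - kd * qd + gravity(q)


def computed_torque(q, qd, q_des, qd_des=0.0, qdd_des=0.0, kp=100.0, kd=20.0):
    """Computed torque: cancel the modelled dynamics, impose linear error dynamics."""
    v = qdd_des + kd * (qd_des - qd) + kp * (q_des - q)
    return inertia(q) * v + B_LINK * qd + gravity(q)


def simulate(controller, q_des, t_end=3.0, dt=1e-3):
    """Integrate M*qdd + b*qd + G(q) = tau with semi-implicit Euler; return final q."""
    q, qd = 0.0, 0.0
    for _ in range(int(t_end / dt)):
        tau = controller(q, qd, q_des)
        qdd = (tau - B_LINK * qd - gravity(q)) / inertia(q)
        qd += qdd * dt
        q += qd * dt
    return q


print(simulate(pd_gravity, 0.5), simulate(computed_torque, 0.5))
```

With an exact model, computed torque leaves the error dynamics e'' + Kd e' + Kp e = 0, so the gains directly set the closed-loop response; on a real robot the model is imperfect and the cancellation is only approximate.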

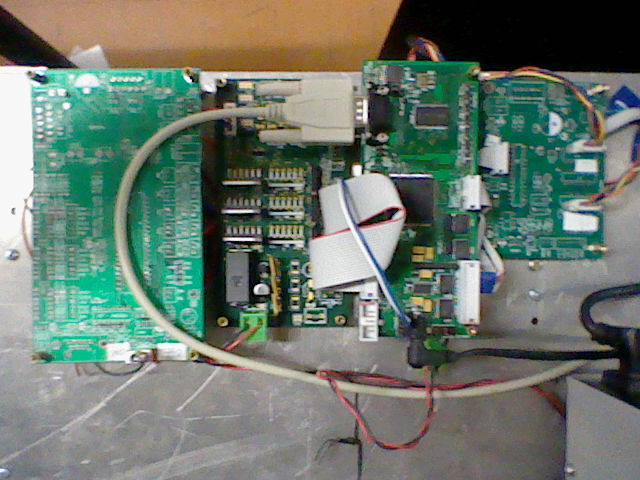

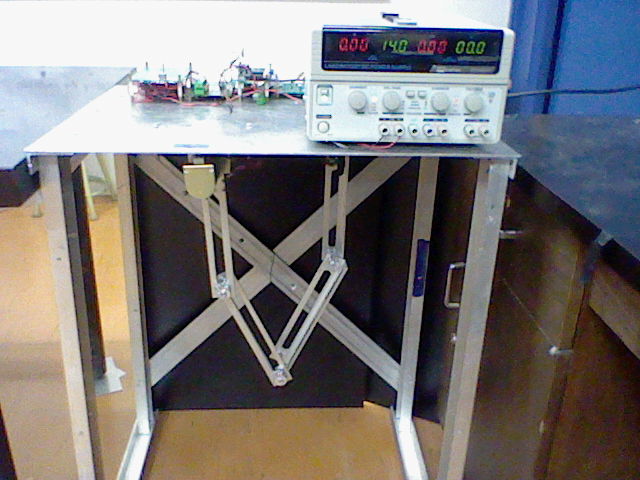

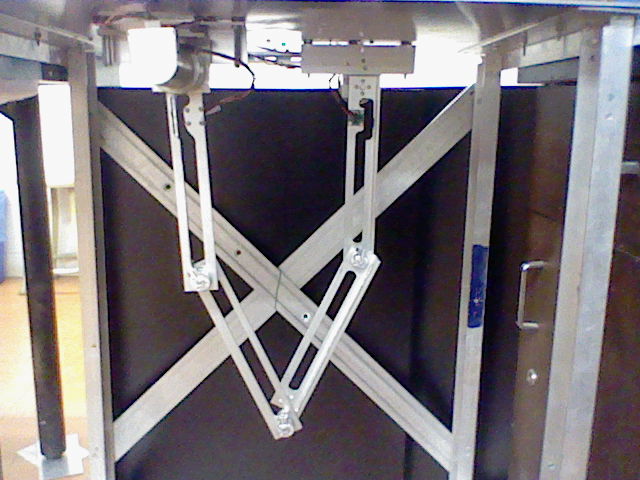

Parallel Robots

The current parallel robot is a planar two-degree-of-freedom system. Eight different controllers have been implemented on it: adaptive and non-adaptive PD controllers; adaptive and non-adaptive backstepping controllers; a generic fuzzy logic controller that uses a singleton fuzzifier, the product inference engine, and a centre-average defuzzifier; an indirect adaptive fuzzy controller; a direct adaptive fuzzy controller; and a fuzzy adaptive backstepping controller. The purpose of implementing such a wide assortment of controllers is to determine which one best suits the desired movements of the system.
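For the generic fuzzy logic controller, a singleton fuzzifier, the product inference engine, and a centre-average defuzzifier combine into the closed-form output u = Σ_l ȳ_l·Π_i μ_il(x_i) / Σ_l Π_i μ_il(x_i), where ȳ_l is the output centre of rule l and μ_il is the membership function of input i in rule l. The sketch below assumes Gaussian membership functions, two inputs (error e and error rate de), three fuzzy sets per input, and a hypothetical PD-like rule table; none of these choices come from the project. In the adaptive fuzzy variants, parameters such as ȳ_l would be tuned online, which is not shown.

```python
import math
from itertools import product

# Hypothetical fuzzy sets (Negative, Zero, Positive) shared by both inputs.
CENTRES = {"N": -1.0, "Z": 0.0, "P": 1.0}
WIDTH = 0.5


def gaussian(x, centre, width):
    """Gaussian membership function (an assumed shape)."""
    return math.exp(-((x - centre) / width) ** 2)


def rule_centre(e_label, de_label):
    """Hypothetical PD-like rule table: output centre = clipped sum of input centres."""
    return max(-1.0, min(1.0, CENTRES[e_label] + CENTRES[de_label]))


# One rule per (e-set, de-set) pair: IF e is A AND de is B THEN u is ybar.
RULES = [((a, b), rule_centre(a, b)) for a, b in product(CENTRES, repeat=2)]


def fuzzy_control(e, de):
    """Singleton fuzzifier + product inference + centre-average defuzzifier."""
    num = den = 0.0
    for (e_label, de_label), y_bar in RULES:
        # Singleton fuzzifier: the crisp inputs are evaluated directly;
        # product inference: the rule's firing strength is the product.
        w = gaussian(e, CENTRES[e_label], WIDTH) * gaussian(de, CENTRES[de_label], WIDTH)
        num += y_bar * w
        den += w
    # Centre-average defuzzifier: weighted mean of the rule output centres.
    return num / den


print(fuzzy_control(0.8, 0.2), fuzzy_control(-0.8, -0.2))
```

Because the output is a weighted average of the rule centres, it always stays within the range spanned by the ȳ_l values, here [-1, 1].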

Autonomously Navigating Robot Using Image Processing

A differential-steering mobile robot is modified and fitted with an optical sensor (the Microsoft Kinect), a microcontroller, motor drivers, and motors. The robotic system is designed to navigate a given environment autonomously, relying primarily on the colour and depth information provided by the optical sensor. Image processing is used to detect or track a target through the sequence of three-dimensional frames, with optical flow as the main algorithm for this task. Fuzzy and PID controllers control the two-degree-of-freedom base on which the optical sensor is mounted, so that a target can still be tracked after it moves beyond the boundaries of the sensor's frame.

The navigation algorithm is modelled on two approaches: map-based and non-map-based. The map-based approach simulates navigational motion under the assumption that the local area map and the robot's position and orientation are available; modified A* (A-Star) and goal-based vector field algorithms were used for this purpose. The non-map-based approach enables the robot to follow a target and to navigate to a target in a simple environment, provided the target always remains visible.

An intelligence engine was also designed to learn the motion behaviors of this non-linear robot using a feed-forward artificial neural network (FFANN) trained with back-propagation. The FFANN is trained to output the correct PWM values for the wheels based on the navigational motion requested by the navigation algorithm.
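The tracking loop on the sensor base can be illustrated with a textbook discrete PID controller acting on one axis of the two-degree-of-freedom base. This sketch assumes that the error is the angle between the target's bearing and the sensor's heading, that the axis accepts an angular-rate command limited to a hypothetical ±1 rad/s, and that the axis behaves as a pure integrator; the gains, the `PID` class, and the `track` function are illustrative, not the project's values, and the fuzzy controller is not shown.

```python
class PID:
    """Textbook discrete PID controller with optional output clamping."""

    def __init__(self, kp, ki, kd, dt, out_limit=None):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.out_limit = out_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, error):
        self.integral += error * self.dt
        # No derivative term on the first sample: there is no previous error yet.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        if self.out_limit is not None:
            out = max(-self.out_limit, min(self.out_limit, out))
        return out


def track(target_bearing, t_end=15.0, dt=0.02):
    """Simulate one base axis as an integrator (heading' = rate command)."""
    pid = PID(kp=2.0, ki=1.0, kd=0.05, dt=dt, out_limit=1.0)  # hypothetical gains
    heading = 0.0
    for _ in range(int(t_end / dt)):
        rate = pid.update(target_bearing - heading)
        heading += rate * dt
    return heading


print(track(0.6))
```

In a full system the error would come from the image-processing stage (for example, the target's offset from the frame centre converted to an angle), and a second instance of the controller would drive the other axis.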
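For the map-based approach, the following sketch shows standard A* on a 4-connected occupancy grid with a Manhattan-distance heuristic. The project used a modified A*, and its modifications are not described here, so this is only the baseline algorithm; the grid encoding (0 = free, 1 = obstacle) and the `a_star` function are assumptions for illustration.

```python
import heapq


def a_star(grid, start, goal):
    """Standard A* on a 4-connected grid (0 = free, 1 = obstacle).

    Returns the list of cells from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible and consistent for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None


grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
print(a_star(grid, (0, 0), (2, 0)))
# [(0, 0), (0, 1), (0, 2), (0, 3), (1, 3), (2, 3), (2, 2), (2, 1), (2, 0)]
```

The resulting cell sequence would then have to be turned into motion commands for the differential-drive base, which is outside this sketch.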
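The intelligence engine's mapping from desired motion to wheel PWM can be illustrated with a small one-hidden-layer FFANN trained by stochastic-gradient back-propagation. This sketch rests on heavy assumptions: the inputs are a hypothetical normalised (linear speed, turn rate) pair, the outputs are normalised left/right PWM values, the network size and learning rate are arbitrary, and `toy_pwm` is an invented stand-in for the robot's behaviour, not data from the project.

```python
import math
import random


class FFANN:
    """One hidden layer (tanh) and a linear output layer, trained by SGD back-propagation."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x):
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(self.w1, self.b1)]
        y = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(self.w2, self.b2)]
        return h, y

    def train_step(self, x, target, lr):
        h, y = self.forward(x)
        # Output error for the squared loss 0.5 * sum((y - t)^2).
        d_out = [yk - tk for yk, tk in zip(y, target)]
        # Hidden error through tanh' = 1 - h^2, computed before w2 is updated.
        d_hid = [(1 - h[j] ** 2) * sum(d_out[k] * self.w2[k][j] for k in range(len(d_out)))
                 for j in range(len(h))]
        for k, dk in enumerate(d_out):
            for j, hj in enumerate(h):
                self.w2[k][j] -= lr * dk * hj
            self.b2[k] -= lr * dk
        for j, dj in enumerate(d_hid):
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * dj * xi
            self.b1[j] -= lr * dj


def toy_pwm(v, w):
    """Hypothetical stand-in (NOT measured data): squashed differential-drive commands."""
    return [math.tanh(v - w), math.tanh(v + w)]


def mean_loss(net, data):
    return sum(0.5 * sum((yk - tk) ** 2 for yk, tk in zip(net.forward(x)[1], t))
               for x, t in data) / len(data)


rng = random.Random(1)
data = []
for _ in range(200):
    v, w = rng.uniform(-1, 1), rng.uniform(-1, 1)
    data.append(([v, w], toy_pwm(v, w)))

net = FFANN(2, 8, 2)
before = mean_loss(net, data)
for epoch in range(100):
    for x, t in data:
        net.train_step(x, t, lr=0.05)
after = mean_loss(net, data)
print(before, after)
```

On the robot itself, the training pairs would have to come from the robot's observed response; the toy target here only shows that the back-propagation loop reduces the error.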